Sam Oliver at OpenFi: Here's what it costs to build a Conversational AI system

Sam Oliver, Founder and CEO at OpenFi, has contributed a fascinating piece over at VentureBeat entitled "What does it cost to build a conversational AI?"

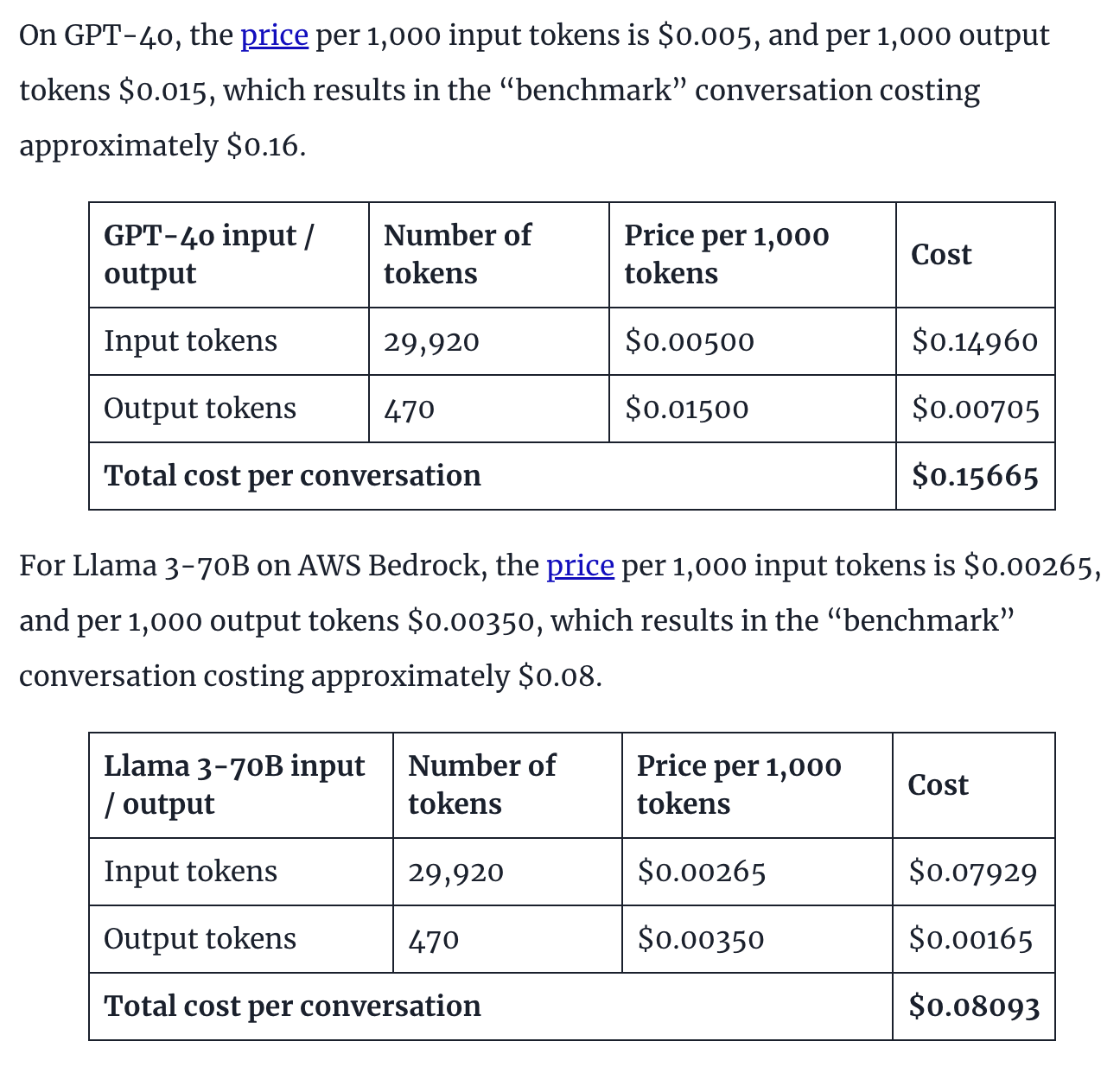

He highlights that, at a base level, OpenAI's ChatGPT costs $0.15 per conversation, whilst Meta's Llama (running on AWS Bedrock) costs roughly half that at $0.08.

A 'conversation' is defined as about 16 messages sent back and forth, thus:

> We assumed the average consumer conversation to total 16 messages between the AI and the human. This was equal to an input of 29,920 tokens, and an output of 470 tokens — so 30,390 tokens in all. (The input is a lot higher due to prompt rules and logic).
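To make the arithmetic concrete, here is a minimal Python sketch of how a per-conversation cost could be derived from those token counts. The token figures come from Sam's quote; the `Pricing` class, the `conversation_cost` function and the per-1,000-token rates are hypothetical placeholders for illustration, not the actual prices charged by OpenAI or AWS, so swap in the current published rates before relying on the result.

```python
from dataclasses import dataclass

# Token counts quoted in the article for a 16-message conversation.
INPUT_TOKENS = 29_920
OUTPUT_TOKENS = 470


@dataclass(frozen=True)
class Pricing:
    """Price in USD per 1,000 tokens (hypothetical structure)."""
    input_per_1k: float
    output_per_1k: float


def conversation_cost(pricing: Pricing,
                      input_tokens: int = INPUT_TOKENS,
                      output_tokens: int = OUTPUT_TOKENS) -> float:
    """Return the cost in USD of one conversation at the given rates."""
    input_cost = (input_tokens / 1000) * pricing.input_per_1k
    output_cost = (output_tokens / 1000) * pricing.output_per_1k
    return input_cost + output_cost


# Placeholder rates, NOT real provider prices.
placeholder = Pricing(input_per_1k=0.01, output_per_1k=0.03)

total_tokens = INPUT_TOKENS + OUTPUT_TOKENS
input_share = INPUT_TOKENS / total_tokens

print(f"Total tokens: {total_tokens}")
print(f"Share of tokens that are input: {input_share:.1%}")
print(f"Cost per conversation: ${conversation_cost(placeholder):.4f}")
```

Because input makes up more than 98% of the tokens in this scenario, the input rate dominates the final figure, which is why the length of the prompt rules and logic matters so much to the cost.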

Here's how Sam explained the pricing:

I think being able to bring this clarity is absolutely fantastic, Sam. Yes, everyone will have different opinions and perspectives, but setting things out in such a simple, easy-to-follow manner will be really valuable for a lot of people.

Sam begins his article thus:

> More than 40% of marketing, sales and customer service organizations have adopted generative AI — making it second only to IT and cybersecurity. Of all gen AI technologies, conversational AI will spread rapidly within these sectors, because of its ability to bridge current communication gaps between businesses and customers.
>
> Yet many marketing business leaders I’ve spoken to get stuck at the crossroads of how to begin implementing that technology. They don’t know which of the available large language models (LLMs) to choose, and whether to opt for open source or closed source. They’re worried about spending too much money on a new and uncharted technology.

Read more about Sam and OpenFi:

- Sam was the first guest on our podcast.

- We also published an exclusive interview with him recently.