Kyutai's Moshi is an incredibly fast voice AI interface

Kyutai ("cute-ai") launched this week with – I think it's fair to say – a lot of impressed nodding from across the AI marketplace.

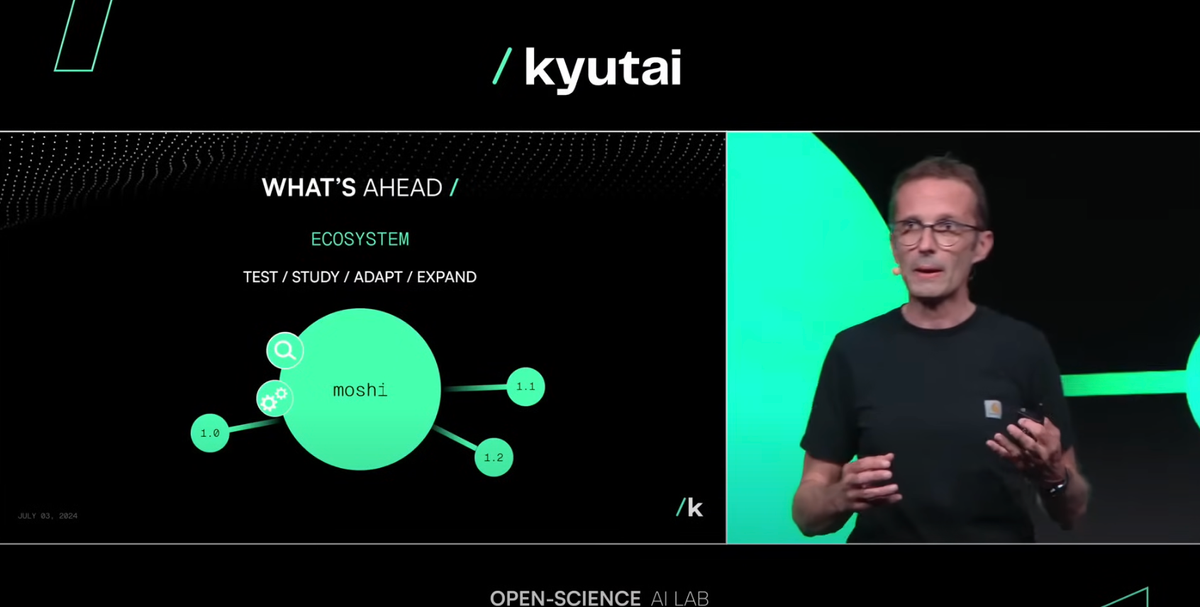

The Paris-based research labs have created their own model from the ground up to help deliver a new level of speed interaction when it comes to voice in particular. It appears from their comments that they've built almost every aspect themselves internally – including creating their own text-to-speech translation.

As the team explained in their launch keynote (see the video embed below), they're doing things somewhat differently than everyone else.

The standard model for voice interaction works more or less like this:

- The system listens to your audio input and it waits until you have finished - or there is a natural pause. Now it's got the recording.

- It then parses this audio recording into text.

- Then the large language model gets to work at trying to generate an appropriate response.

- Then it converts this text output into speech and plays this to you.

This has been fine, but even with the OpenAI 4.o demos we saw recently, there is some considerable delay in the speed. Sometimes that end-to-end journey I've outlined above can take a good few seconds before the system can reply.

This makes for quite a disjointed experience.

Moshi - the name of the Kyutai's AI model - does this processing in parallel. It's always listening so it's translating and processing your audio continually in order to deliver a much, much quicker response.

Kyutai reports that the theoretical speed of the model is 160 miliseconds, although it's generally delivering around 200+ miliseconds in real use cases such as the examples we see in their keynote.

This makes a huge different to the human experience of the technology. It means you can – theoretically – hold an ongoing conversation with the tech in a much more natural manner.

In some of the examples during the keynote, the responses and speed was remarkable.

I followed the team's invitation to try the model out on my browser earlier and - wow - it is fast. Very fast. It was also very accurate. I asked Moshi if it was raining in Tokyo and it was stupendously fast at replying 'no'.

I asked how it knew the actual weather in Tokyo. It immediately explained it had access to weather data. I then went and checked (Googled!) to see that Tokyo's weather is currently 28 degrees centigrade. Nice work Moshi.

Keep an eye on Kyutai – and definitely go and try it out yourself at moshi.chat.

Here's the press release the Kyutai team published last week:

In just 6 months, with a team of 8, the Kyutai research lab developed from scratch an artificial intelligence (AI) model with unprecedented vocal capabilities called Moshi. The team publicly unveiled its experimental prototype today in Paris. At the end of the presentation, the participants – researchers, developers, entrepreneurs, investors and journalists – were themselves able to interact with Moshi.

The interactive demo of the AI will be accessible from the Kyutai website at the end of the day. It can therefore be freely tested online as from today, which constitutes a world first for a generative voice AI. This new type of technology makes it possible for the first time to communicate in a smooth, natural and expressive way with an AI.

During the presentation, the Kyutai team interacted with Moshi to illustrate its potential as a coach or companion for example, and its creativity through the incarnation of characters in roleplays. More broadly, Moshi has the potential to revolutionize the use of speech in the digital world. For instance, its text-to-speech capabilities are exceptional in terms of emotion and interaction between multiple voices.

Compact, Moshi can also be installed locally and therefore run safely on an unconnected device. With Moshi, Kyutai intends to contribute to open research in AI and to the development of the entire ecosystem. The code and weights of the models will soon be freely shared, which is also unprecedented for such technology.

They will be useful both to researchers in the field and to developers working on voice-based products and services. This technology can therefore be studied in depth, modified, extended or specialized according to needs. T

he community will in particular be able to extend Moshi's knowledge base and factuality, which are currently deliberately limited in such a lightweight model, while exploiting its unparalleled voice interaction capabilities.

And here's the boilerplate from the CV giving an overview of Kyutai:

Kyutai is a non-profit laboratory dedicated to open research in AI, founded in November 2023 by the iliad Group, CMA CGM and Schmidt Sciences. Launched with an initial team of six leading scientists, who have all worked with Big Tech labs in the USA, Kyutai continues to recruit at the highest level, and also offers internships to research Master’s degree students. Now comprising a dozen members, the team will launch its first PhD theses at the end of the year. The research undertaken explores new general-purpose models with high capabilities. The lab is currently working in particular on multimodality, i.e., the possibility for a model to exploit different types of content (text, sound, images, etc.) both for learning and for inference. All the models developed are intended to be freely shared, as are the software and know-how that enabled their creation. To carry out its work and train its models, Kyutai relies in particular for its compute on the Nabu 23 superpod made available by Scaleway, a subsidiary of the iliad Group

You can watch the launch video here:

Very exciting indeed.

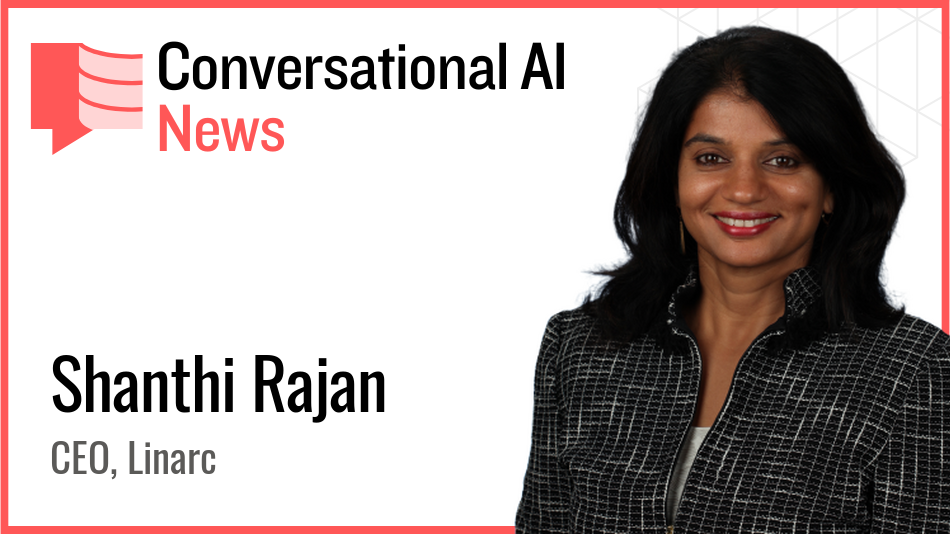

You can read more about Kyutai on the Conversational AI Marketplace.