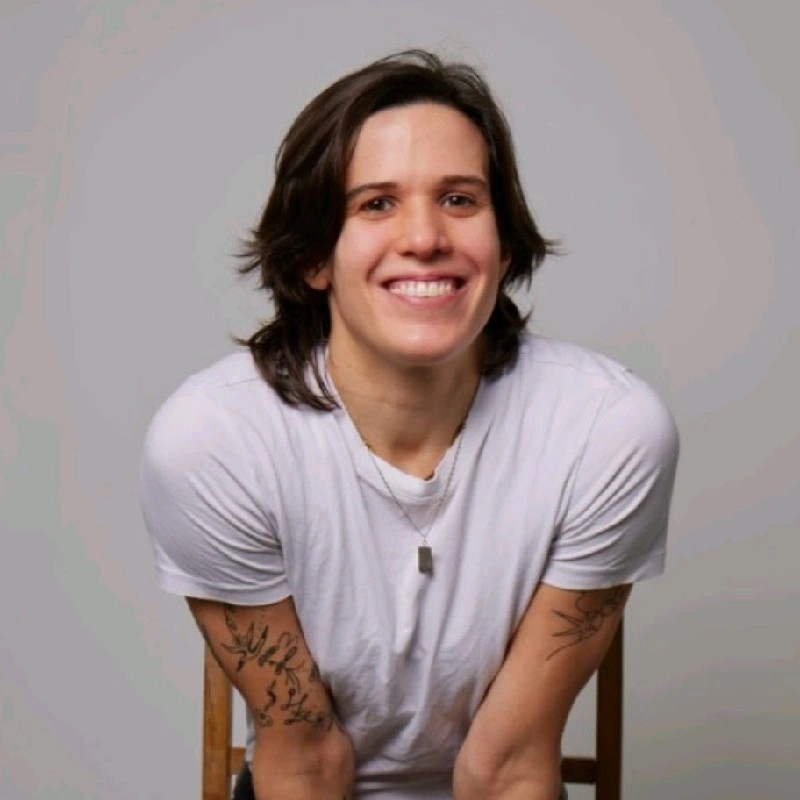

James Evans, Head of AI, Amplitude

James Evans discusses how Amplitude is embedding purpose-built AI agents into digital analytics to deliver context-aware insights and transform user behaviour analysis.

James Evans leads AI at Amplitude, where he's pioneering the use of task-specific AI agents that work continuously to improve conversion, onboarding, and monetisation outcomes. Following Amplitude's acquisition of Command AI, which he co-founded, James has been instrumental in embedding AI capabilities directly into the company's behavioural analytics platform, helping over 4,000 companies unlock actionable insights from their product data.

My questions are in bold. Over to you, James:

**Who are you, and what's your background?**

I lead AI at Amplitude, a digital analytics software company. I joined in October 2024, when Amplitude acquired Command AI, the company I co-founded. At Command AI, we developed "user assistance software": tools that embed into websites and products to make them easier to use. Our products are in the process of being packaged as Amplitude offerings, including the already released Guides and Surveys.

**What is your job title, and what are your general responsibilities?**

I'm Head of AI and Engagement Products at Amplitude. Day-to-day, I work with engineering and product teams to embed AI into our behavioural analytics platform. This means building agents that detect user behaviour patterns, surface insights teams wouldn't normally ask for, and improve conversion, onboarding, and feature adoption. I ensure our AI maintains customer trust through human oversight and transparent reasoning. It's about creating AI that augments human decision-making rather than replacing it, requiring constant collaboration across product, engineering, and design teams.

**Can you give us an overview of how you're using AI today?**

We're delivering context-aware AI agents that work 24/7 to improve outcomes like conversion, onboarding, and monetisation. Unlike generic copilots, our agents are task-specific experts embedded in Amplitude's behavioural graph. They identify what should be asked based on continuous observation of user behaviour patterns. Our agents detect subtle anomalies, form hypotheses, and test them in real time, moving beyond static dashboards. We've built agent templates tailored to real user needs through customer collaboration. The key differentiator is that our AI operates directly on Amplitude's unified data model, spotting patterns traditional analytics miss whilst maintaining transparency.

**Tell us about your investment in AI. What's your approach?**

Our approach prioritises measurable customer impact over technical novelty. We ask: Can this agent meaningfully reduce time-to-insight or time-to-impact? Does it help teams accomplish things they couldn't before? If yes and it's scalable, we move forward. We've built a dedicated AI team with a "build" rather than "buy" philosophy, creating purpose-built solutions that integrate natively rather than bolting on generic tools. We focus on initiatives that remove friction and unlock speed, transforming slow workflows into high-speed systems. We take calculated risks on emerging capabilities like multi-agent orchestration, but everything ties back to tangible outcomes.

**What prompted you to explore AI solutions? What specific problems were you trying to solve?**

At Command AI, we made an early bet on how LLM-based AI could help guide user behaviour. When we got to Amplitude after the acquisition, we noticed a tremendous opportunity to deploy that AI to unleash the Amplitude platform more broadly. Our core bet is that the current generation of LLMs can essentially provide unlimited time to our users (as opposed to unlimited intelligence, which is how a lot of people think of AI in our space). So we ask the question: what could our users do if they could spend 1,000 extra hours a week in Amplitude? What if we shipped them a bunch of Amplitude experts to do work for them? That's what our Amplitude Agents are designed to do.

**Who are the primary users of your AI systems, and what's your measurement of success? Have you encountered any unexpected use cases or benefits?**

Our primary users are product, data, and marketing teams needing to understand user behaviour and drive improvements. Success is measured by reduction in time-to-insight and time-to-impact. One unexpected benefit has been experimentation velocity. Many companies operate at hyperscale but run few experiments due to setup complexity. Our early users leverage AI agents to generate experimental ideas and jump straight to approval, driving faster learning about customer preferences. We're also seeing users, particularly those less fluent with traditional interfaces, becoming skilled at prompting AI, finding text-based interactions more intuitive than navigating dashboards.

**What has been your biggest learning or pivot moment in your AI journey?**

The biggest learning is that building with AI is fundamentally different from building traditional SaaS. It's much more about prototyping, assessing where today's models are effective and what direction they are trending in, reacting to drops from AI infrastructure providers, and running beta tests with customers. It's less about meticulous planning and execution, because an annual roadmap has to get re-evaluated essentially weekly. It took us a few months to figure this out and to train our entire PD org to work this way.

**How do you address ethical considerations and responsible AI use in your organisation?**

Customer trust is built into our architecture. Users set autonomy levels and guardrails for every AI agent, and nothing customer-facing happens without human approval. We're committed to human-in-the-loop models where AI augments rather than overrides judgement. We don't treat data as a black box. Our agents show their reasoning, surface evidence, and invite feedback. We use data transparently within customer consent limits.

**What skills or capabilities are you currently building in your team to prepare for the next phase of AI development?**

We hire people who are technically strong but deeply curious about solving real customer problems. Technical skills can be developed, but genuine curiosity about user needs is harder to teach. We're building capabilities in multi-agent orchestration, enabling teams of AI agents to collaborate towards complex goals. We're developing expertise in autonomous decision-making systems that suggest, test, and validate changes with minimal human input. Beyond technical skills, we foster a culture of experimentation and fast iteration. We focus on early alignment, cultural onboarding, and giving people autonomy over meaningful work, enabling collaboration across teams whilst exploring multimodal inputs and richer feedback loops.

**If you had a magic wand, what one thing would you change about current AI technology, regulation or adoption patterns?**

I'd eliminate the obsession with flashy, standalone AI features and redirect focus towards embedding AI into core functionality where it delivers tangible value. The industry is too caught up in "wouldn't it be cool if" thinking without asking whether it actually solves customer problems. There's also a fundamental misunderstanding about AI's role: it should augment human capabilities, not replace them. I'd change the narrative from "AI versus human" to "AI enabling human empathy at scale." I'd also address companies getting lost in pricing concerns for AI products. People spend too much time worrying about model costs instead of focusing on product-market fit.

**What is your advice for other senior leaders evaluating their approach to using and implementing AI? What's one thing you wish you had known before starting your AI journey?**

Start with data you know you can use legally, then build from there. Don't design AI products and frantically scramble for data afterwards. Sometimes this means being less ambitious initially, but it ensures solid ethical ground. Be transparent with users about the value exchange. When people understand the tangible benefits of sharing data, they're often comfortable doing so. Focus on removing friction and unlocking speed rather than chasing impressive technology. AI should solve real problems within existing workflows, not create complexity. One thing I wish I'd known: enterprise AI product timelines have longer apertures than model release cycles. Build expecting models to improve significantly by launch.

**What AI tools or platforms do you personally use beyond your professional use cases?**

I'm a heavy user of ChatGPT for rapid prototyping. It's incredible for testing whether ideas have legs before investing development resources. I paste concepts in to see if they produce reasonable results, validating product directions quickly.

**What's the most impressive new AI product or service you've seen recently?**

I'm fascinated by UI prototyping tools like Lovable and Bolt. They exemplify what happens when you find AI's natural strengths rather than forcing it into predetermined use cases. Nobody was saying "UI prototyping is the number one thing AI will excel at," but these teams discovered it, refined it, and now everyone loves using them. What impresses me most is their approach: they weren't afraid to build and iterate in the open until the product was genuinely good. This contrasts with the typical enterprise approach of extensive customer discovery before building. The common thread in impressive AI products is solving real, high-friction problems with clear value propositions rather than showcasing technical capabilities.

**Finally, let's talk predictions. What trends do you think are going to define the next 12-18 months in the AI technology sector, particularly for your industry?**

Language-based interfaces will become standard entry points for complex applications. We're seeing users, especially those less comfortable with traditional software interfaces, become more skilled at prompting AI than navigating dashboards. This will fundamentally change how analytics platforms are designed. Multimodal AI capabilities will mature significantly, enabling analysis of not just text but broader session data and user behaviour patterns, unlocking more contextually relevant insights. The industry will move away from generic copilots towards purpose-built, outcome-driven agents. Companies embedding AI into core functionality rather than treating it as a bolt-on feature will gain competitive advantage. Finally, experimentation velocity will accelerate dramatically as AI removes friction from hypothesis generation and testing.

Many thanks to James Evans for taking the time to share his insights with Conversational AI News. You can connect with James on LinkedIn or learn more about Amplitude's AI-powered analytics platform at amplitude.com.